RAG vs. Context-Window in GPT-4: accuracy, cost, & latency

TL;DR: (RAG + GPT-4) delivers superior performance, at 4% of the cost.

TL;DR: (RAG + GPT-4) beats the context window on performance, at 4% of the cost.

Introduction

The next stage of LLMs in production is all about making responses hyper-specific: to a dataset, to a user, to a use-case, even to a specific invocation.

This is typically achieved using one of 3 basic techniques:

Context-window-stuffing

RAG (Retrieval Augmented Generation)

Fine-tuning.

(If none of these mean anything to you - consider subscribing to the newsletter - I will cover each qualitatively in the next post in the series.)

As practitioners know, and contrary to hype (“A GPT trained on your data..!”), it’s context-window-stuffing and RAG (not fine-tuning) which are predominantly used to specialize an LLM’s responses. (Fine-tuning plays its own important & unique role - which I will cover in my next blog post).

I recently added a new document-oriented react hook to CopilotKit, made specifically to accommodate (potentially long-form) documents.

To help choose sane defaults (and inspired by Greg Kamradt’s comparison of GPT-4 vs. Claude 2.1) I carried out a “needle in a haystack” pressure-test of RAG against GPT-4-Turbo’s context-window, across 3 key metrics:

(1) accuracy, (2) cost, and (3) latency.

Furthermore, I benchmarked 2 distinct RAG pipelines:

Llama-Index - the most popular open-source RAG framework (default settings).

OpenAI’s new assistant API’s retrievals tool — which uses RAG under the hood (and has been shown to use the Qdrant vector DB).

Results

To start at the end, here are the results:

TL;DR: Modern RAG works really, really well. Depending on your usecase, quite you may never want to stuff the context window anywhere near its capacity (at least when dealing with text).

(1) accuracy

As seen in the graph above, the assistants api’s (GPT-4 + RAG) knocks it out of the park, with near perfect performance.

Note- this performance only pertains to search-style queries. There are other use-cases for large context-windows (e.g. few-shot learning).

(2) cost

Context-window stuffing incurs only a per-token cost, while RAG incurs a per-token cost as well as an additional ~fixed LLM reasoning cost.

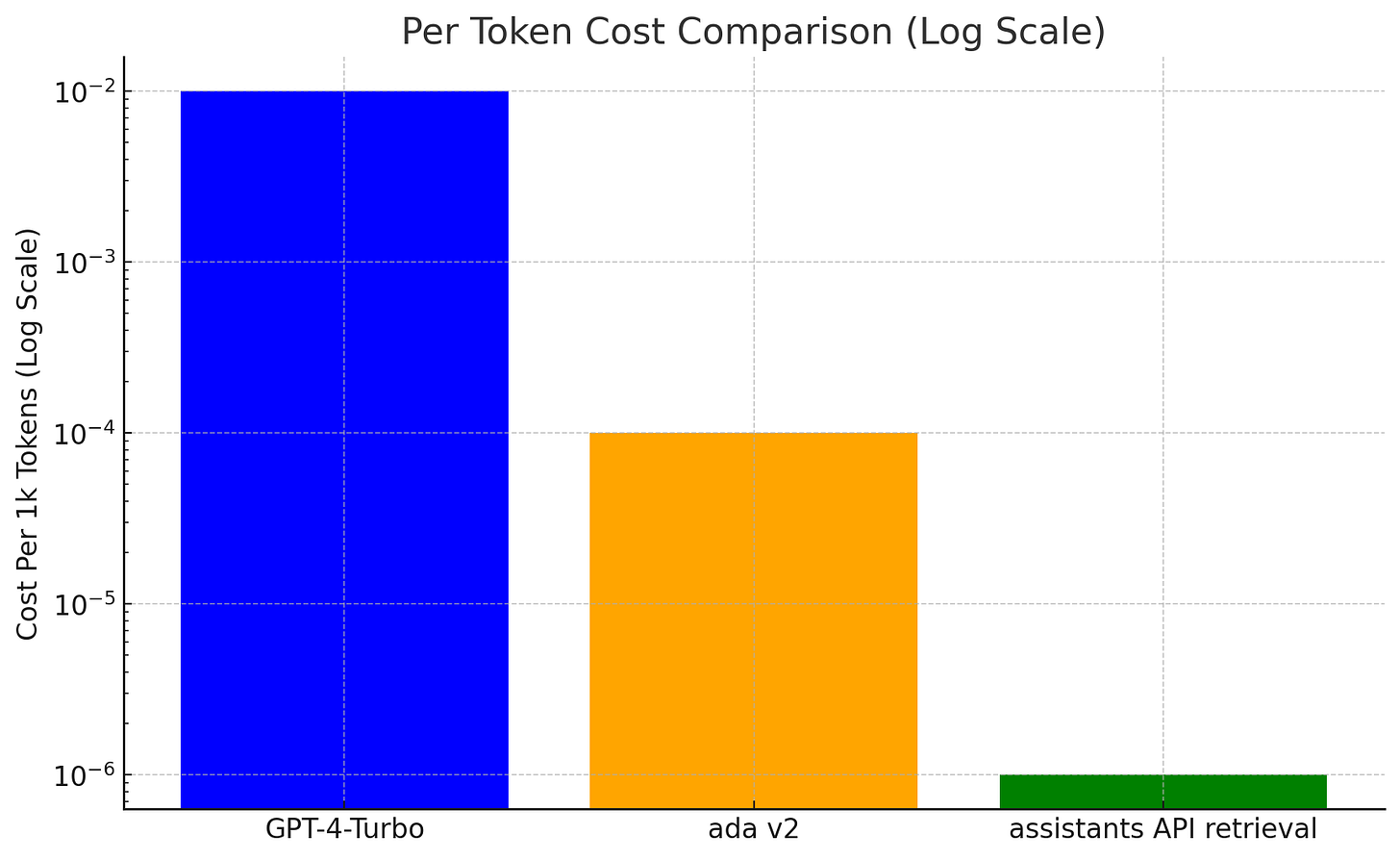

Here are the per-token costs:

In case you missed it, the spread is across 4 orders of magnitude (log scale).

(See figures & calculations in the analysis section).

*Note, OpenAI is not charging for retrieval at all until mid-January 2024 - but the prices are published, and that’s what I used in calculations.

It’s possible the extra-reduced price vs. ada V2 is some communication gaffe that OpenAI will correct (e.g. perhaps they’ve failed to mention that they pass-on the embeddings cost in addition to indexing cost). Another possibility: they’re using a new cheaper (and ostensibly, better) embeddings model which has yet to be published.

—

But again, RAG also incurs a ~fixed LLM agent loop cost.

For 128k context window, the average total cost came to roughly $0.0004/ 1k-tokens, or 4% of the GPT-4-Turbo cost.

The LlamaIndex cost was slightly cheaper, but comparable at $0.00028/1k tokens (due to a less sophisticated agentic loop).

(3) Latency

Usually RAG is carried out against offline data, where retrieval latency is measured in milliseconds, and end-to-end latency is dominated by the LLM call.

But I thought it’d be interesting to compare end-to-end latencies, from document upload to getting back the result - to see if RAG could be competitive with “online” (rather than offline) data. Short answer: Yes!

Below are end-to-end latencies for queries on 128k token documents:

LlamaIndex RAG came in lowest at 12.9s on average.

GPT4-Turbo came in next at 21.6s on average - but with a wide spread of 7-36s.

The assistant API RAG retrieval came in at 24.8s

Furthermore, most applications can benefit from optimistic document uploading to minimize perceived latency. With RAG indexing costs being so low, there’s often not much to lose.

Methodology

I’ve built on the excellent work of Greg Kamradt who recently published “needle in a haystack” pressure-testing of GPT-4-Turbo and Claude 2.1.

Essentially, we take a “haystack”, and hide somewhere inside it a “needle”. Then we interrogate the system about the needle. I put the needle at different positions within the haystack - from the very start, to the end, in ~10% increments.

In the context-window-stuffing experiments, I simply pushed the “haystack” onto the LLM call context window. In the RAG experiments, I created a single document and performed RAG on it.

(As in Greg’s excellent analysis, the “haystack” was a collection of Paul Graham essays, and the needle was an unrelated factoid.)

Analysis

(1) Accuracy

GPT-4 + RAG performed extremely well.

This is not entirely surprising. Putting irrelevant information in the LLM context window is not just expensive, it’s actively harmful to performance. Less junk = better results.

These results highlight how early we still are into the LLM revolution. The wider community is still figuring out the most sensible ways to compose the new LLM building blocks together. It’s entirely possible that the context-window wars of the past year will end on an uneventful note — with the understanding that increasingly-sophisticated RAG-based techniques, rather than larger context windows, are key (at least for text).

LlamaIndex

I’d expected RAG to perform more or less identically as the context window increased. But it did not — there was a clear degradation past a ~100k context length. My guess is past a certain context size, the needle was no longer fetched by the retrieval process. I plan to confirm this by debugging a little under the hood (perhaps for the next article).

Different chunking and retrieval configurations could impact this result. But I purposefully benchmarked only the defaults.

Overall I’m very bullish on LlamaIndex and Open-Source LLM tech. It’s clear RAG is still very much in low-hanging-fruit territory, and simplifying frameworks are key. Llama-Index is well-positioned to continue incorporating new techniques & best practices as they emerge.

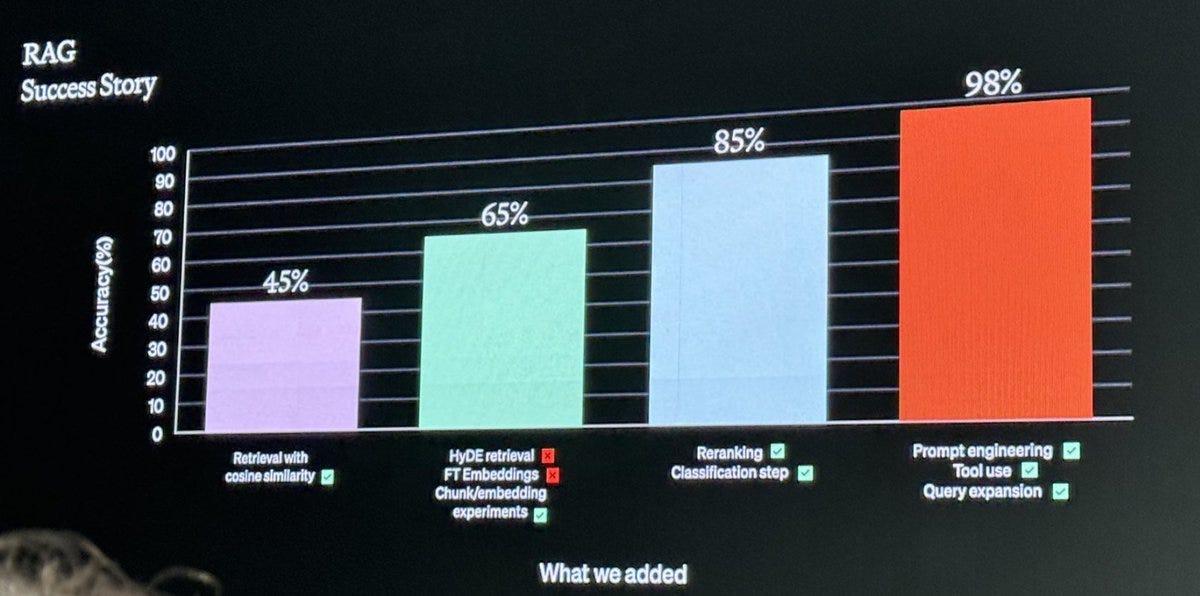

This leaked OpenAI’s DevDay slide gives some inspiration:

(2) Cost

RAG cost analysis is a little subtle because it’s only partially deterministic.

The first part of RAG is Retrieval - the most “promising” document chunks are chosen from the wider dataset according to some heuristic (typically vector search). The second part is Generation Augmentation - the chosen chunks are fed into a “standard” LLM call (and with increasing commonality, to an agentic LLM loop - as is done in OpenAI’s retrievals).

In principle, retrieval can be achieved using lots of techniques, from keyword search, to relational search, to hybrid techniques. In practice, most contemporary RAG approaches use predominantly vector search - which incurs a one-time, per-token indexing cost. As the ecosystem matures, hybrid techniques will likely be increasingly used.

Per-token costs

Let’s first look at the per-token costs across the board:

GPT-4-Turbo charges input tokens at $0.01/1k tokens.

(That’s a 3x and 6x price reduction, respectively, from GPT-4 and GPT-4-32k).OpenAI’s ada v2 embeddings model charges $0.0001 / 1k tokens.

That’s 100x cheaper than GPT-4-Turbo.OpenAI’s assistant API’s retrieval capability is even more aggressively priced. It’s charged on a “serverless” basis at $0.20 / GB / assistant / day. Assuming 1 token ~ 5 bytes, That’s $1 × 10^-6 dollars / 1k tokens / assistant / day.

Or 100x cheaper than ada v2, and 10,000x cheaper than GPT-4 input tokens.

Fixed overhead

The overhead part is difficult to calculate (or impossible, in OpenAI’s case), so I simply measured it empirically.

As given in the results section, RAG also incurs a fixed overhead, derived from the LLM reasoning step. With a 128k context, this fixed cost comes to 4% of the GPT-4 context window.

Quick back-of-the-envelope math says that from a cost perspective, it may only be cost-effective to use context-stuffing below a 5k context window.

(3) Latency.

In principle, embedding computations are highly parallelizable. Therefore given market demand, future infra improvements will likely bring latency down essentially to the round-trip of a single chunk embedding.

In such a case we’ll see even “online” RAG pipeline latencies shrink down considerably, to the point where “online” RAG latency is dominated only by the latency of the LLM chain of thought loop.

—

The end.

Exciting days ahead.

If you’re building a Copilot into your application, interested in contributing, or in evolving the open copilot protocol, make sure to join the GitHub / Discord!